While preparing for my talk at TyphoonCon, about how to find bugs in the Linux kernel, I discovered a neat little vulnerability in the kernel's TIPC networking stack.

I found this while playing around with syzkaller as part of the research for my talk; I felt like it would only be fair to find some bugs to share if I'm doing a talk about it :)

I picked the TIPC protocol for a few reasons: it had low coverage, net surface is fun, it's not enabled by default (not out here trying to find critical RCEs for a slide example) plus I have some previous experience working with the protocol.

In this post I'm mainly going to be talking about the vulnerability itself, remediation and maybe I'll go a little bit into exploitation cos I can't help myself. If I can find the time, I'd love to do a future post talking more about the discovery process and exploitation.

Overview

The vulnerability allows a local or remote attacker to trigger a use-after-free in the TIPC networking stack on affected installations of the Linux kernel.

Only systems with the TIPC module built (CONFIG_TIPC=y/CONFIG_TIPC=m) and loaded are vulnerable. Additionally, in order to be vulnerable to a remote attack the system must have TIPC configured on an interface reachable by an attacker.

The flaw exists in the implementation of TIPC message fragment reassembly, specifically tipc_buf_append(). The function carries out the reassembly by chaining the fragmented packet buffers together. It takes the first fragment as the head buffer and then processes subsequent fragments sequentially, adding their packet buffers onto the head buffer's chain.

The vulnerability occurs due to a missing check in the error handling cleanup. On error, the reassembly will bail, freeing both the head buffer (and its chained buffers) and the latest fragment buffer currently being processed. If the latest fragment buffer has already been added to the head buffer's chain at this point, it will lead to a use-after-free.

The vulnerability was introduced in commit 1149557d64c9 (Mar 2015) and fixed in commit 080cbb890286 (May 2024), affecting kernel versions 4 through to 6.8.

It was assigned ZDI-24-821 and CVE-2024-36886 (shoutout to the insane description formatting on that one).

Timeline

- 2024-03-23: Case opened with ZDI

- 2024-04-25: Case reviewed by ZDI

- 2024-04-25: Case disclosed to the vendor

- 2024-05-02: Fix published by the vendor

- 2024-06-20: Coordinated public release of ZDI advisory

Background Stuff

Before we dive into the juicy details, I'm going to cover some background information to provide some additional context to the vulnerability. Feel free to skip this if you're already familiar with the networking subsystem and TIPC basics!

net/ Basics

So to kick things off, let's try and give a bit of background on some of the networking subsystem fundamentals, as this is where the TIPC protocol is implemented!

I say try, because this subsystem is pretty complex and there's a lot of ground to cover. But in short, the networking subsystem does what it says on the tin: it provides networking capability to the kernel. And it does so in a way that is modular and extensible, providing a core API to implement various networking devices, protocols and interfaces.

struct sk_buff

One of the fundamental structures that the kernel provides is struct sk_buff which represents a network packet and its status. The structure is created when a kernel packet is received, either from the user space or from the network interface.[3]

The kernel documentation honestly does a great job unpacking this rather complicated structure, so I'd recommend checking that out (up to the checksum section at least).

Essentially, struct sk_buff itself stores various metadata and the actual packet data is stored in associated buffers. A large part of the complexity surrounding the structure is how these buffers, and the relevant pointers to them, are accessed and manipulated.
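To make the "metadata here, bytes over there" split a bit more concrete, here is a tiny user-space sketch. Everything in it (struct mock_skb, mock_skb_pull()) is a hypothetical stand-in I've made up for illustration, not the kernel's definition; it only mimics the idea behind skb_pull(), which drops bytes from the front of the packet by moving a pointer rather than copying data.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical miniature of sk_buff: metadata pointing into a separate buffer. */
struct mock_skb {
    unsigned char *head;   /* start of the allocated buffer */
    unsigned char *data;   /* start of the bytes currently treated as packet data */
    unsigned int len;      /* number of valid bytes starting at data */
};

/* Strip hdr_len bytes from the front, in the spirit of the kernel's skb_pull(). */
static unsigned char *mock_skb_pull(struct mock_skb *skb, unsigned int hdr_len)
{
    assert(hdr_len <= skb->len);   /* the mock's own bounds check */
    skb->len -= hdr_len;
    skb->data += hdr_len;          /* no copy: only the view of the buffer changes */
    return skb->data;
}
```

Calling mock_skb_pull() with a header size leaves head untouched and moves data past the header, which is roughly what happens later on when the TIPC fragment header is stripped from each fragment.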

struct skb_shared_info

One of the features baked into this core API is packet fragmentation, the idea that a protocol's data may be split across several packets - so we have a situation where some data is fragmented across the data buffers of several struct sk_buffs.

This is where struct skb_shared_info comes in!

    /* This data is invariant across clones and lives at
     * the end of the header data, ie. at skb->end.
     */
    struct skb_shared_info {
        __u8 flags;
        __u8 meta_len;
        __u8 nr_frags;
        __u8 tx_flags;
        unsigned short gso_size;
        /* Warning: this field is not always filled in (UFO)! */
        unsigned short gso_segs;
        struct sk_buff *frag_list;
        struct skb_shared_hwtstamps hwtstamps;
        unsigned int gso_type;
        u32 tskey;

        /*
         * Warning : all fields before dataref are cleared in __alloc_skb()
         */
        atomic_t dataref;
        unsigned int xdp_frags_size;

        /* Intermediate layers must ensure that destructor_arg
         * remains valid until skb destructor */
        void * destructor_arg;

        /* must be last field, see pskb_expand_head() */
        skb_frag_t frags[MAX_SKB_FRAGS];
    };

Among other things, this allows a packet to keep track of its fragments! Relevant to us is frag_list, used to link struct sk_buff headers together for reassembly.
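If the frag_list idea is new to you, the following user-space sketch might help. The names (struct mock_skb, struct mock_shinfo, chain_total_len()) are hypothetical and massively simplified: in the kernel the shared info lives at skb->end rather than inside the struct, and the head's len already accounts for chained data. The only point here is the shape of the chain: the head points at the first fragment, and fragments point at each other via next.

```c
#include <stddef.h>

/* Hypothetical, heavily simplified stand-ins for the kernel structures. */
struct mock_skb;

struct mock_shinfo {
    struct mock_skb *frag_list;   /* first chained fragment, or NULL */
};

struct mock_skb {
    struct mock_skb *next;        /* next fragment in a chain */
    unsigned int len;             /* bytes held by this buffer alone (mock-only meaning) */
    struct mock_shinfo shinfo;    /* the kernel keeps this at skb->end instead */
};

/* Sum the bytes in a head buffer plus every buffer on its frag_list. */
static unsigned int chain_total_len(const struct mock_skb *head)
{
    unsigned int total = head->len;
    const struct mock_skb *frag;

    for (frag = head->shinfo.frag_list; frag; frag = frag->next)
        total += frag->len;
    return total;
}
```

Anything that walks this chain (including the code that frees it) trusts every next pointer along the way, which is worth keeping in mind for later.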

TIPC Primer

Transparent Inter Process Communication (TIPC) is an IPC mechanism designed for intra-cluster communication, originating from Ericsson where it has been used in carrier grade cluster applications for many years. Cluster topology is managed around the concept of nodes and the links between these nodes.

TIPC communications are done over a "bearer", which is a TIPC abstraction of a network interface. A "media" is a bearer type, of which there are four currently supported: Ethernet, Infiniband, UDP/IPv4 and UDP/IPv6.

A local attacker is able to set up a UDP bearer as an unprivileged user via netlink, as demonstrated by bl@sty during his work on CVE-2021-43267[1]. However, a remote attacker is restricted by whatever bearers are already set up on a system.

TIPC messages have their own header, of which there are several formats outlined in the specification[2]. A common theme is the concept of message "user" which defines their purpose (see "Figure 4: TIPC Message Types"[2]) and can be used to infer the format of the TIPC message.

There is a handshake to establish a link between nodes (see "Link Creation"[2]). An established link is required to reach the vulnerable code. This essentially involves sending three messages to: advertise the node, reset the state and then set the state.

- Exploiting CVE-2021-43267

- TIPC Protocol Documentation

- https://linux-kernel-labs.github.io/refs/heads/master/labs/networking.html#linux-networking

- https://docs.kernel.org/networking/skbuff.html

The Vulnerability

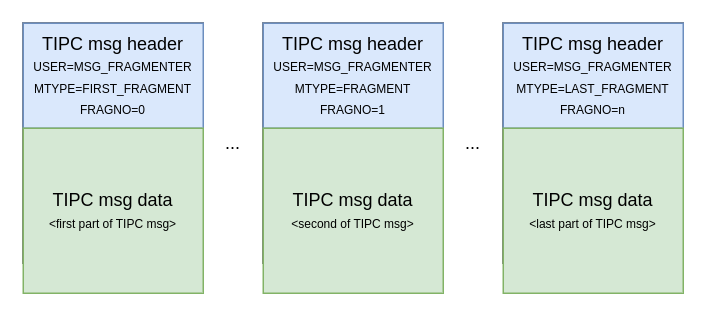

The TIPC protocol features message fragmentation, where a single TIPC message can be split into fragments and sent to its destination via several packets:

When a message is longer than the identified MTU of the link it will use, it is split up in fragments, each being sent in separate packets to the destination node. Each fragment is wrapped into a packet headed by an TIPC internal header [...] The User field of the header is set to MSG_FRAGMENTER, and each fragment is assigned a Fragment Number relative to the first fragment of the message. Each fragmented message is also assigned a Fragmented Message Number, to be present in all fragments. [...] At reception the fragments are reassembled so that the original message is recreated, and then delivered upwards to the destination port. [1]

So essentially, each fragment is wrapped up in a TIPC fragment message (a message with the MSG_FRAGMENTER user). Each of these fragment messages will provide metadata in its header, such as the fragment number, so that the fragment within can be reassembled in the right order on the receiving end.

Exploring The Call Trace

Let's take a look at the kernel call trace for a MSG_FRAGMENTER message being received by a TIPC UDP bearer. This gives us a bit of context about how the TIPC networking stack handles incoming packets:

    #0 tipc_link_input+0x41b/0x850 net/tipc/link.c:1339
    #1 tipc_link_rcv+0x77a/0x2dc0 net/tipc/link.c:1839
    #2 tipc_rcv+0x519/0x3030 net/tipc/node.c:2159
    #3 tipc_udp_recv+0x745/0x930 net/tipc/udp_media.c:421
    #4 udp_queue_rcv_one_skb+0xe76/0x19b0 net/ipv4/udp.c:2113
    #5 udp_queue_rcv_skb+0x136/0xa60 net/ipv4/udp.c:2191

#5 & #4 show the underlying UDP networking stack stuff. #3 is where TIPC first receives inbound TIPC-over-UDP messages, which does some basic bearer level checks before handing the skb over to #2, tipc_rcv().

After bearer level checks, all inbound TIPC packets are processed by #2, tipc_rcv(). This involves sanity checks on TIPC header values and using a combination of message user and link state to figure out how the packet is going to be processed.

A valid MSG_FRAGMENTER message is received by #1, tipc_link_rcv():

    int tipc_link_rcv(struct tipc_link *l, struct sk_buff *skb,
                      struct sk_buff_head *xmitq)
    {
        struct sk_buff_head *defq = &l->deferdq;
        struct tipc_msg *hdr = buf_msg(skb);
        u16 seqno, rcv_nxt, win_lim;
        int released = 0;
        int rc = 0;

        /* Verify and update link state */
        if (unlikely(msg_user(hdr) == LINK_PROTOCOL))
            return tipc_link_proto_rcv(l, skb, xmitq);

        /* Don't send probe at next timeout expiration */
        l->silent_intv_cnt = 0;

        do {
            hdr = buf_msg(skb);
            seqno = msg_seqno(hdr); [0]
            rcv_nxt = l->rcv_nxt; [1]
            win_lim = rcv_nxt + TIPC_MAX_LINK_WIN;

            if (unlikely(!link_is_up(l))) {
                if (l->state == LINK_ESTABLISHING)
                    rc = TIPC_LINK_UP_EVT;
                kfree_skb(skb);
                break;
            }

            /* Drop if outside receive window */
            if (unlikely(less(seqno, rcv_nxt) || more(seqno, win_lim))) { [2]
                l->stats.duplicates++;
                kfree_skb(skb);
                break;
            }
            released += tipc_link_advance_transmq(l, l, msg_ack(hdr), 0,
                                                  NULL, NULL, NULL, NULL);

            /* Defer delivery if sequence gap */
            if (unlikely(seqno != rcv_nxt)) { [3]
                if (!__tipc_skb_queue_sorted(defq, seqno, skb))
                    l->stats.duplicates++;
                rc |= tipc_link_build_nack_msg(l, xmitq);
                break;
            }

            /* Deliver packet */
            l->rcv_nxt++;
            l->stats.recv_pkts++;

            if (unlikely(msg_user(hdr) == TUNNEL_PROTOCOL))
                rc |= tipc_link_tnl_rcv(l, skb, l->inputq);
            else if (!tipc_data_input(l, skb, l->inputq))
                rc |= tipc_link_input(l, skb, l->inputq, &l->reasm_buf); [5]
            if (unlikely(++l->rcv_unacked >= TIPC_MIN_LINK_WIN))
                rc |= tipc_link_build_state_msg(l, xmitq);
            if (unlikely(rc & ~TIPC_LINK_SND_STATE))
                break;
        } while ((skb = __tipc_skb_dequeue(defq, l->rcv_nxt))); [4]

        /* Forward queues and wake up waiting users */
        if (released) {
            tipc_link_update_cwin(l, released, 0);
            tipc_link_advance_backlog(l, xmitq);
            if (unlikely(!skb_queue_empty(&l->wakeupq)))
                link_prepare_wakeup(l);
        }
        return rc;
    }

tipc_link_rcv() uses the sequence number, pulled from the TIPC message header [0], to determine the order in which to process the incoming skbs. It uses struct tipc_link to manage the link state, including what seqno it's expecting next [1].

Out of order packets are either dropped [2] or added to the defer queue, defq, for later [3] [4]. When the correct seqno is hit, it will do some checks to see how to process it. When the user is MSG_FRAGMENTER, the packet is passed to #0 tipc_link_input() [5].
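The drop / defer / deliver decision is easier to reason about in isolation, so here's a rough user-space sketch of it. Treat it as a model under assumptions: I'm assuming less() and more() are wraparound-aware comparisons over the 16-bit sequence space, MOCK_MAX_LINK_WIN is an assumed stand-in for TIPC_MAX_LINK_WIN, and classify_seqno() plus the rx_action enum are names I've invented for the example.

```c
#include <stdint.h>

#define MOCK_MAX_LINK_WIN 8191u   /* assumed stand-in for TIPC_MAX_LINK_WIN */

enum rx_action { RX_DROP, RX_DEFER, RX_DELIVER };

/* left <= right in 16-bit sequence space (values wrap at 65536) */
static int seq_less_eq(uint16_t left, uint16_t right)
{
    return (uint16_t)(right - left) < 32768u;
}

static int seq_less(uint16_t left, uint16_t right)
{
    return seq_less_eq(left, right) && left != right;
}

static int seq_more(uint16_t left, uint16_t right)
{
    return !seq_less_eq(left, right);
}

/* Decide what the receive path would do with a packet carrying seqno. */
static enum rx_action classify_seqno(uint16_t seqno, uint16_t rcv_nxt)
{
    uint16_t win_lim = (uint16_t)(rcv_nxt + MOCK_MAX_LINK_WIN);

    if (seq_less(seqno, rcv_nxt) || seq_more(seqno, win_lim))
        return RX_DROP;      /* outside the receive window, cf. [2] */
    if (seqno != rcv_nxt)
        return RX_DEFER;     /* sequence gap: park it on the defer queue, cf. [3] */
    return RX_DELIVER;       /* exactly the packet the link expected */
}
```

The interesting takeaway for an attacker is the middle case: a packet that is in-window but early gets parked rather than processed, so the sender has some say over when a given buffer is actually handled.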

tipc_link_input(), #0, processes the packet depending on the user:

    static int tipc_link_input(struct tipc_link *l, struct sk_buff *skb,
                               struct sk_buff_head *inputq,
                               struct sk_buff **reasm_skb)
    {
        // snip
        } else if (usr == MSG_FRAGMENTER) {
            l->stats.recv_fragments++;
            if (tipc_buf_append(reasm_skb, &skb)) {
                l->stats.recv_fragmented++;
                tipc_data_input(l, skb, inputq);
            } else if (!*reasm_skb && !link_is_bc_rcvlink(l)) {
                pr_warn_ratelimited("Unable to build fragment list\n");
                return tipc_link_fsm_evt(l, LINK_FAILURE_EVT);
            }
            return 0;
        } // snip

        kfree_skb(skb);
        return 0;
    }

Examining tipc_buf_append()

This function is the root cause of the vulnerability. tipc_buf_append() is used to append the buffers containing message fragments, in order to reassemble the original message:

    /* tipc_buf_append(): Append a buffer to the fragment list of another buffer
     * @*headbuf: in:  NULL for first frag, otherwise value returned from prev call
     *            out: set when successful non-complete reassembly, otherwise NULL
     * @*buf:     in:  the buffer to append. Always defined
     *            out: head buf after successful complete reassembly, otherwise NULL
     * Returns 1 when reassembly complete, otherwise 0
     */
    int tipc_buf_append(struct sk_buff **headbuf, struct sk_buff **buf)
    {
        struct sk_buff *head = *headbuf;
        struct sk_buff *frag = *buf;
        struct sk_buff *tail = NULL;
        struct tipc_msg *msg;
        u32 fragid;
        int delta;
        bool headstolen;

        if (!frag)
            goto err;

        msg = buf_msg(frag);
        fragid = msg_type(msg); [0]
        frag->next = NULL;
        skb_pull(frag, msg_hdr_sz(msg));

        if (fragid == FIRST_FRAGMENT) {
            if (unlikely(head))
                goto err;
            *buf = NULL;
            if (skb_has_frag_list(frag) && __skb_linearize(frag))
                goto err;
            frag = skb_unshare(frag, GFP_ATOMIC);
            if (unlikely(!frag))
                goto err;
            head = *headbuf = frag; [1]
            TIPC_SKB_CB(head)->tail = NULL;
            return 0;
        }

        if (!head)
            goto err;

        if (skb_try_coalesce(head, frag, &headstolen, &delta)) { [2]
            kfree_skb_partial(frag, headstolen);
        } else { [3]
            tail = TIPC_SKB_CB(head)->tail;
            if (!skb_has_frag_list(head))
                skb_shinfo(head)->frag_list = frag;
            else
                tail->next = frag;
            head->truesize += frag->truesize;
            head->data_len += frag->len;
            head->len += frag->len;
            TIPC_SKB_CB(head)->tail = frag;
        }

        if (fragid == LAST_FRAGMENT) {
            TIPC_SKB_CB(head)->validated = 0;
            if (unlikely(!tipc_msg_validate(&head))) [4]
                goto err; [5]
            *buf = head;
            TIPC_SKB_CB(head)->tail = NULL;
            *headbuf = NULL;
            return 1;
        }
        *buf = NULL;
        return 0;
    err:
        kfree_skb(*buf); [6]
        kfree_skb(*headbuf); [7]
        *buf = *headbuf = NULL;
        return 0;
    }

Walking through a typical case, when the first fragment is received tipc_buf_append() is called with *headbuf == NULL & *buf pointing to the packet buffer of the first fragment. Note the fragment id (first, last or other) is stored in the TIPC header [0].

For the first fragment, some checks are done, the buffer is used to initialise headbuf [1] and the function returns. For subsequent fragments in this sequence, headbuf is already initialised when tipc_buf_append() is called. These packets are then either coalesced into the head buffer [2] or added to its frag_list [3].

Finally, when the LAST_FRAGMENT is processed and added to the chain, the header of the originally fragmented message is validated [4]. If you recall, the fragmented message is carried inside the MSG_FRAGMENTER messages, so it has its own header that hasn't been validated yet.

Notably, if this fails (e.g. we intentionally scuff up the header of the fragmented message), we bail to the error path [5] and both buffers are freed [6] [7]. At this point buf points to the last fragment and headbuf points to the head buffer (the first fragment). It is possible for buf to be in the frag_list of headbuf at this point, as we've seen.

However, kfree_skb() isn't a simple kfree() wrapper, due to the complexity of struct sk_buff. It involves quite a bit of cleanup, including cleaning up the fragments referenced by the frag_list ... you can probably see where this is going!

The last fragment, buf, is freed [6]. Then, the head buffer is freed [7] whereby its frag_list is iterated for cleanup, leading to a use-after-free, as the final fragment has just been freed prior to this call [6]!
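If it helps, here's the same sequence boiled down to a toy user-space model. It's deliberately fake: struct mock_skb, mock_kfree_skb() and buggy_error_path() are hypothetical names, and instead of really releasing memory the model just sets a freed flag and counts how often a "freed" buffer gets touched again, so it's safe to run.

```c
#include <stddef.h>

struct mock_skb {
    struct mock_skb *next;       /* next buffer on a frag_list chain */
    struct mock_skb *frag_list;  /* head buffer only: first chained fragment */
    int freed;                   /* set instead of really releasing memory */
};

static int stale_accesses;       /* times an already "freed" buffer was touched */

/* Model of kfree_skb(): release the buffer, then everything on its frag_list. */
static void mock_kfree_skb(struct mock_skb *skb)
{
    struct mock_skb *seg, *next;

    if (!skb)
        return;
    if (skb->freed)
        stale_accesses++;
    skb->freed = 1;
    for (seg = skb->frag_list; seg; seg = next) {
        next = seg->next;        /* the kind of read KASAN complains about */
        if (seg->freed)
            stale_accesses++;
        seg->freed = 1;
    }
}

/* Pre-patch error path: *buf was chained onto *headbuf, yet both get freed. */
static void buggy_error_path(struct mock_skb **headbuf, struct mock_skb **buf)
{
    (*headbuf)->frag_list = *buf;  /* last fragment joins the head's chain */
    mock_kfree_skb(*buf);          /* mirrors [6] */
    mock_kfree_skb(*headbuf);      /* mirrors [7]: walks frag_list, hits freed *buf */
    *buf = *headbuf = NULL;
}
```

Running buggy_error_path() on a fresh head and fragment leaves stale_accesses at 1: the walk over the head's frag_list lands on the fragment that was released a moment earlier.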

We can see this by exploring the rest of the call trace when we trigger the bug:

    [ 48.900496] ==================================================================
    [ 48.901414] BUG: KASAN: slab-use-after-free in kfree_skb_list_reason+0x549/0x5c0
    [ 48.902395] Read of size 8 at addr ffff88800927c900 by task syz_test/207
    [ 48.903256]
    [ 48.903450] CPU: 1 PID: 207 Comm: syz_test Not tainted 6.7.4-gd09175322cfa-dirty #6
    [ 48.904221] Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 1.15.0-1 04/01/2014
    [ 48.905046] Call Trace:
    [ 48.905306] <IRQ>
    [ 48.905490] dump_stack_lvl+0x72/0xa0
    [ 48.905787] print_report+0xcc/0x620
    [ 48.906736] kasan_report+0xb0/0xe0
    [ 48.907096] kfree_skb_list_reason+0x549/0x5c0
    [ 48.909613] skb_release_data.isra.0+0x4fd/0x850
    [ 48.909997] kfree_skb_reason+0xf4/0x380
    [ 48.910171] tipc_buf_append+0x3e4/0xad0

The site that triggers KASAN is when the fragmented buffer list is iterated during kfree_skb_list_reason(). It is passed the frag_list of the head buffer in skb_release_data() [0]:

    static void skb_release_data(struct sk_buff *skb, enum skb_drop_reason reason,
                                 bool napi_safe)
    {
        struct skb_shared_info *shinfo = skb_shinfo(skb);
        int i;

        // snip

    free_head:
        if (shinfo->frag_list)
            kfree_skb_list_reason(shinfo->frag_list, reason); [0]

        // snip

We can then see the KASAN trigger in kfree_skb_list_reason() here [1]:

    void __fix_address
    kfree_skb_list_reason(struct sk_buff *segs, enum skb_drop_reason reason)
    {
        struct skb_free_array sa;

        sa.skb_count = 0;

        while (segs) {
            struct sk_buff *next = segs->next; [1]

            if (__kfree_skb_reason(segs, reason)) {
                skb_poison_list(segs);
                kfree_skb_add_bulk(segs, &sa, reason);
            }

            segs = next;
        }

        if (sa.skb_count)
            kmem_cache_free_bulk(skbuff_cache, sa.skb_count, sa.skb_array);
    }

Variations

There's a couple of variations to this vulnerability which are worth mentioning. First of all, the vulnerable path can also be reached in a very similar manner via TUNNEL_PROTOCOL messages, as seen in this call trace:

    kfree_skb_reason+0xf4/0x380 net/core/skbuff.c:1108
    kfree_skb include/linux/skbuff.h:1234 [inline]
    tipc_buf_append+0x3ce/0xb50 net/tipc/msg.c:186
    tipc_link_tnl_rcv net/tipc/link.c:1398 [inline]
    tipc_link_rcv+0x1a89/0x2dc0 net/tipc/link.c:1837
    tipc_rcv+0x1220/0x3030 net/tipc/node.c:2173
    tipc_udp_recv+0x745/0x930 net/tipc/udp_media.c:421

Additionally, some eagle eyed readers may also have noticed there's another way to trigger the use-after-free within tipc_buf_append():

    int tipc_buf_append(struct sk_buff **headbuf, struct sk_buff **buf)
    {
        // snip
        if (skb_try_coalesce(head, frag, &headstolen, &delta)) {
            kfree_skb_partial(frag, headstolen); [0]
        } else {
            tail = TIPC_SKB_CB(head)->tail;
            if (!skb_has_frag_list(head))
                skb_shinfo(head)->frag_list = frag;
            else
                tail->next = frag;
            head->truesize += frag->truesize;
            head->data_len += frag->len;
            head->len += frag->len;
            TIPC_SKB_CB(head)->tail = frag;
        }

        if (fragid == LAST_FRAGMENT) {
            TIPC_SKB_CB(head)->validated = 0;
            if (unlikely(!tipc_msg_validate(&head)))
                goto err;
            *buf = head;
            TIPC_SKB_CB(head)->tail = NULL;
            *headbuf = NULL;
            return 1;
        }
        *buf = NULL;
        return 0;
    err:
        kfree_skb(*buf); [1]
        kfree_skb(*headbuf); [2]
        *buf = *headbuf = NULL;
        return 0;
    }

The initial free can occur at either site [0] or [1]. We've covered the latter case, but if the last fragment was coalesced, then the initial free occurs at [0] instead.

Exploitation

Unfortunately I haven't had the time to work on putting together an exploit for this vulnerability, though I'd love to set some time aside in the future. Sorry! :(

From an LPE perspective, the use-after-free of a struct sk_buff provides a pretty nice primitive due to its complexity and usage. There's been some nice write-ups in the past making good use of the structure for LPE, so check those out if interested![1][2]

The RCE side of things is more opaque and something I'm really keen to explore more. Two major roadblocks for Linux kernel RCE are: KASLR and the drastically reduced surface for heap fengshui and generally affecting device state.

At least on the latter, we have some nice flexibility with this vulnerability. We have some control over the affected caches via our TIPC messages. The defer queue could potentially be used to introduce delays and control when objects are freed. Who knows!

- CVE-2021-0920: Android sk_buff use-after-free in Linux

- Four Bytes of Power: Exploiting CVE-2021-26708 in the Linux kernel

Here are some posts on other TIPC related bugs and stuff for interested readers:

- CVE-2021-43267: Remote Linux Kernel Heap Overflow by @maxpl0it

- Exploiting CVE-2021-43267 by @bl4sty

- CVE-2022-0435: A Remote Stack Overflow in The Linux Kernel by me

Fix + Remediation

    ---
     net/tipc/msg.c | 6 +++++-
     1 file changed, 5 insertions(+), 1 deletion(-)

    diff --git a/net/tipc/msg.c b/net/tipc/msg.c
    index 5c9fd4791c4ba1..9a6e9bcbf69402 100644
    --- a/net/tipc/msg.c
    +++ b/net/tipc/msg.c
    @@ -156,6 +156,11 @@ int tipc_buf_append(struct sk_buff **headbuf, struct sk_buff **buf)
         if (!head)
             goto err;
    +    /* Either the input skb ownership is transferred to headskb
    +     * or the input skb is freed, clear the reference to avoid
    +     * bad access on error path.
    +     */
    +    *buf = NULL;
         if (skb_try_coalesce(head, frag, &headstolen, &delta)) {
             kfree_skb_partial(frag, headstolen);
         } else {
    @@ -179,7 +184,6 @@ int tipc_buf_append(struct sk_buff **headbuf, struct sk_buff **buf)
             *headbuf = NULL;
             return 1;
         }
    -    *buf = NULL;
         return 0;
     err:
         kfree_skb(*buf);

We can see the patch is fairly simple (even if the context is not): the reference to the input skb (buf) is cleared before the error case that can cause the UAF. This is because the block handling the fragment coalescing/chaining already does the appropriate cleanup for it via frag (which at this point is also a reference to the input skb).

It's a bit clearer if we provide some more context:

        /* Either the input skb ownership is transferred to headskb
         * or the input skb is freed, clear the reference to avoid
         * bad access on error path.
         */
        *buf = NULL;
        if (skb_try_coalesce(head, frag, &headstolen, &delta)) { [2]
            kfree_skb_partial(frag, headstolen); [3]
        } else { [4]
            tail = TIPC_SKB_CB(head)->tail;
            if (!skb_has_frag_list(head))
                skb_shinfo(head)->frag_list = frag;
            else
                tail->next = frag;
            head->truesize += frag->truesize;
            head->data_len += frag->len;
            head->len += frag->len;
            TIPC_SKB_CB(head)->tail = frag;
        }

        if (fragid == LAST_FRAGMENT) {
            TIPC_SKB_CB(head)->validated = 0;
            if (unlikely(!tipc_msg_validate(&head)))
                goto err;
            *buf = head; [0]
            TIPC_SKB_CB(head)->tail = NULL;
            *headbuf = NULL;
            return 1;
        }
        // before the patch: *buf = NULL; [1]
        return 0;
    err:
        kfree_skb(*buf);
        kfree_skb(*headbuf);
        *buf = *headbuf = NULL;
        return 0;
    }

So if we recall our vulnerable case: after we've chained our last fragment, if the TIPC header of the fragmented message (which is now assembled) is invalid, we take the goto err immediately before [0]. Before the patch, we then reach err: and cause the UAF, as buf was only ever cleared at [1], which this path never reaches.

By the time we reach [2] we know that the input skb is a trailing fragment and is referenced by both buf and frag. At this point we don't need the buf reference, as the following block handles the input skb appropriately via frag: either it is coalesced into head and freed [3], or it is added to head's frag list, at which point head is responsible for it [4].

As a result, we can just clear the unnecessary buf reference before it can cause any trouble. Hopefully that's not too convoluted an explanation for a simple patch!
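To sanity check that reasoning, here's a toy user-space model comparing the two behaviours. As before, it's a sketch with made-up names (struct mock_skb, mock_kfree_skb(), append_then_fail()) and it only counts "frees" rather than releasing anything, so it says nothing about the real allocator, just about who ends up freeing what.

```c
#include <stddef.h>

struct mock_skb {
    struct mock_skb *next;       /* next buffer on a frag_list chain */
    struct mock_skb *frag_list;  /* head buffer only: first chained fragment */
    int free_count;              /* how many times this buffer was "freed" */
};

/* Model of kfree_skb(): count a free for the buffer and for its chain. */
static void mock_kfree_skb(struct mock_skb *skb)
{
    struct mock_skb *seg;

    if (!skb)
        return;                  /* like kfree_skb(), NULL is a no-op */
    skb->free_count++;
    for (seg = skb->frag_list; seg; seg = seg->next)
        seg->free_count++;
}

/* Chain frag onto head, then fail validation; 'patched' selects the behaviour. */
static void append_then_fail(struct mock_skb **headbuf, struct mock_skb **buf,
                             int patched)
{
    struct mock_skb *head = *headbuf;
    struct mock_skb *frag = *buf;

    if (patched)
        *buf = NULL;             /* ownership is about to move to head */
    head->frag_list = frag;

    /* validation of the reassembled message fails: the err: label */
    mock_kfree_skb(*buf);
    mock_kfree_skb(*headbuf);
    *buf = *headbuf = NULL;
}
```

With patched set to 0 the fragment ends up with a free_count of 2 (once directly, once via the head's chain); with patched set to 1 every buffer is freed exactly once, because the only remaining owner of the fragment is the head.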

Remediation

Chances are, as I mentioned up top, unless you're running TIPC you're all good! However, if you are, or want to be extra safe, prior to a patch being made available, the TIPC module can be disabled from loading if not in use:

- `$ lsmod | grep tipc` will let you know if the module is currently loaded

- `$ modprobe -r tipc` may allow you to unload the module if loaded, however you may need to reboot your system

- `$ echo "install tipc /bin/true" >> /etc/modprobe.d/disable-tipc.conf` will prevent the module from being loaded, which is a good idea if you have no reason to use it

Wrapup

As always, thank you for surviving up until this point! This research has been super fun, hopefully this has been an interesting read and not missing too much context; I appreciate it's a particularly complex topic with lots of moving parts. Also, this was somewhat rushed due to having a lot going on at the moment, so I apologise for any drop in quality!

I'd like to thank ZDI and the Linux kernel maintainers for the work involved in getting this vulnerability disclosed and patched!

There's quite a few things I'd love to do in follow-up to this post, if I can only find the time! I'd be happy to go into more detail on the discovery process and working with syzkaller, I really want to play around with exploitation and I also think it'd be neat to expand the Linternals blog series with some networking content!

In the meantime, if you're interested in modifying syzkaller, check out @notselwyn's post on "Tickling ksmbd: fuzzing SMB in the Linux kernel", I found it super helpful!

Feel free to @me if you have any questions, suggestions or corrections :)

exit(0);